Context Engineering for Large Language Models: A Comprehensive Survey

Context Engineering Technical Framework

Research Background

The performance of Large Language Models (LLMs) is fundamentally determined by the quality of contextual information provided during inference. This comprehensive survey introduces Context Engineering as a formal discipline that transcends simple prompt design to encompass systematic optimization of information payloads for LLMs.

Core Contributions

🏗️ Theoretical Framework

- Comprehensive Taxonomy: Decomposes context engineering into foundational components and sophisticated system implementations

- Technical Roadmap: Establishes clear development pathways for the field

- Unified Framework: Provides a cohesive theoretical foundation for researchers and engineers advancing context-aware AI

📊 Large-Scale Literature Analysis

- 1300+ Research Papers systematically analyzed

- 1401 Citations comprehensively organized

- 165 Pages of detailed technical survey

🔍 Key Findings

The survey reveals a fundamental asymmetry in model capabilities:

- ✅ Strong Understanding: Current models excel at comprehending complex contexts

- ❌ Limited Generation: The same models show pronounced limitations in generating equally sophisticated long-form outputs

Technical Architecture

Foundational Components

- Context Retrieval & Generation: Methods for acquiring and creating relevant contextual information

- Context Processing: Techniques for analyzing, filtering, and structuring contextual data

- Context Management: Strategies for organizing, storing, and maintaining context across interactions
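
To make the three foundational components concrete, here is a minimal Python sketch of how they might fit together. It is an illustration only, not the survey's method: the function names (`retrieve`, `process`, `build_context`), the `ContextStore` class, the word-overlap scoring, and the word budget are all assumptions introduced for this example.

```python
from collections import deque


def retrieve(query, corpus, k=2):
    """Context retrieval (toy): rank documents by word overlap with the query."""
    q = set(query.lower().split())
    ranked = sorted(corpus, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return ranked[:k]


def process(snippets, max_words=20):
    """Context processing (toy): drop exact duplicates, then enforce a word budget."""
    seen, kept, used = set(), [], 0
    for s in snippets:
        n = len(s.split())
        if s in seen or used + n > max_words:
            continue  # skip duplicates and anything that would exceed the budget
        seen.add(s)
        kept.append(s)
        used += n
    return kept


class ContextStore:
    """Context management (toy): keep only the most recent turns across interactions."""

    def __init__(self, max_turns=3):
        self.turns = deque(maxlen=max_turns)  # older turns are evicted automatically

    def add(self, turn):
        self.turns.append(turn)

    def snapshot(self):
        return list(self.turns)


def build_context(query, corpus, store):
    """Combine the three stages into one context payload for a model call."""
    snippets = process(retrieve(query, corpus))
    store.add(query)
    return {"history": store.snapshot(), "snippets": snippets}
```

A real system would likely replace the overlap score with an embedding-based retriever and the word budget with a token budget; the sketch only shows where each component sits in the flow.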

System Implementations

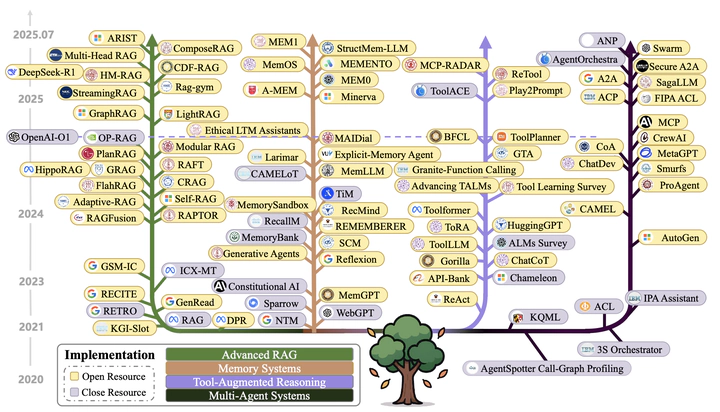

- Retrieval-Augmented Generation (RAG): Integrating external knowledge retrieval with generation

- Memory Systems & Tool-Integrated Reasoning: Persistent context storage and tool utilization

- Multi-Agent Systems: Collaborative context sharing and distributed reasoning
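
As a rough illustration of the first item, the sketch below shows the basic RAG pattern of retrieving passages and placing them in the prompt before generation. Everything here is hypothetical: `top_k`, `build_prompt`, and `rag_answer` are names invented for this example, the overlap-based ranking stands in for a real retriever, and `generate` is a placeholder for whatever model call an application uses.

```python
def top_k(query, docs, k=1):
    """Toy retriever: rank documents by shared words with the query."""
    q = set(query.lower().split())
    return sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)[:k]


def build_prompt(query, passages):
    """Place retrieved passages ahead of the question in a single prompt string."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


def rag_answer(query, docs, generate, k=1):
    """Retrieve, assemble the prompt, then hand it to a caller-supplied generator."""
    return generate(build_prompt(query, top_k(query, docs, k)))
```

Passing `generate` in as a parameter keeps the sketch independent of any particular model API; for a dry run, `rag_answer(question, docs, generate=lambda p: p)` simply returns the assembled prompt.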

Research Impact

🎯 Theoretical Significance

- First systematic definition of Context Engineering as a formal discipline

- Establishment of a comprehensive technical taxonomy

- Identification of critical bottlenecks in LLM capability development

🚀 Practical Applications

- Guidelines for AI system design and implementation

- Advancement of RAG, multi-agent, and memory-augmented technologies

- Promotion of context-aware AI in production environments

🔮 Future Directions

- Long-Form Generation: Addressing limitations in generating sophisticated extended outputs

- Context Optimization: Enhancing information payload quality and efficiency

- System Integration: Advancing complex AI system architecture and implementation

Recognition & Impact

📈 Academic Recognition:

- #1 Paper of the day on Hugging Face Papers

- 64+ upvotes with sustained community engagement

- Featured in multiple research collections

🌟 Industry Value: Provides systematic engineering methodologies for LLM applications, offering crucial guidance for enterprise-level AI system development and production deployment.

🔗 Community Engagement:

- Ongoing collaboration around the 165-page survey

- Open research initiative organizing 1401 citations

- Active GitHub repository for reproducible research

“Context Engineering represents more than a technical challenge—it embodies the core methodology for AI system design. This survey establishes a fundamental foundation for building next-generation intelligent systems.”

— STAIR Research Group